Unreal Realm

Despite the variety of device types and screen sizes, our experience rests on a handful of touch gestures (swiping, pinching, tapping) that act as the backbone of mobile interaction today. Gestural interfaces are highly cinegenic, rich with action and graphical possibilities. They also fit the stories of remote interaction that are becoming more and more relevant in the real world as remote technologies proliferate.

DESIGN QUESTION

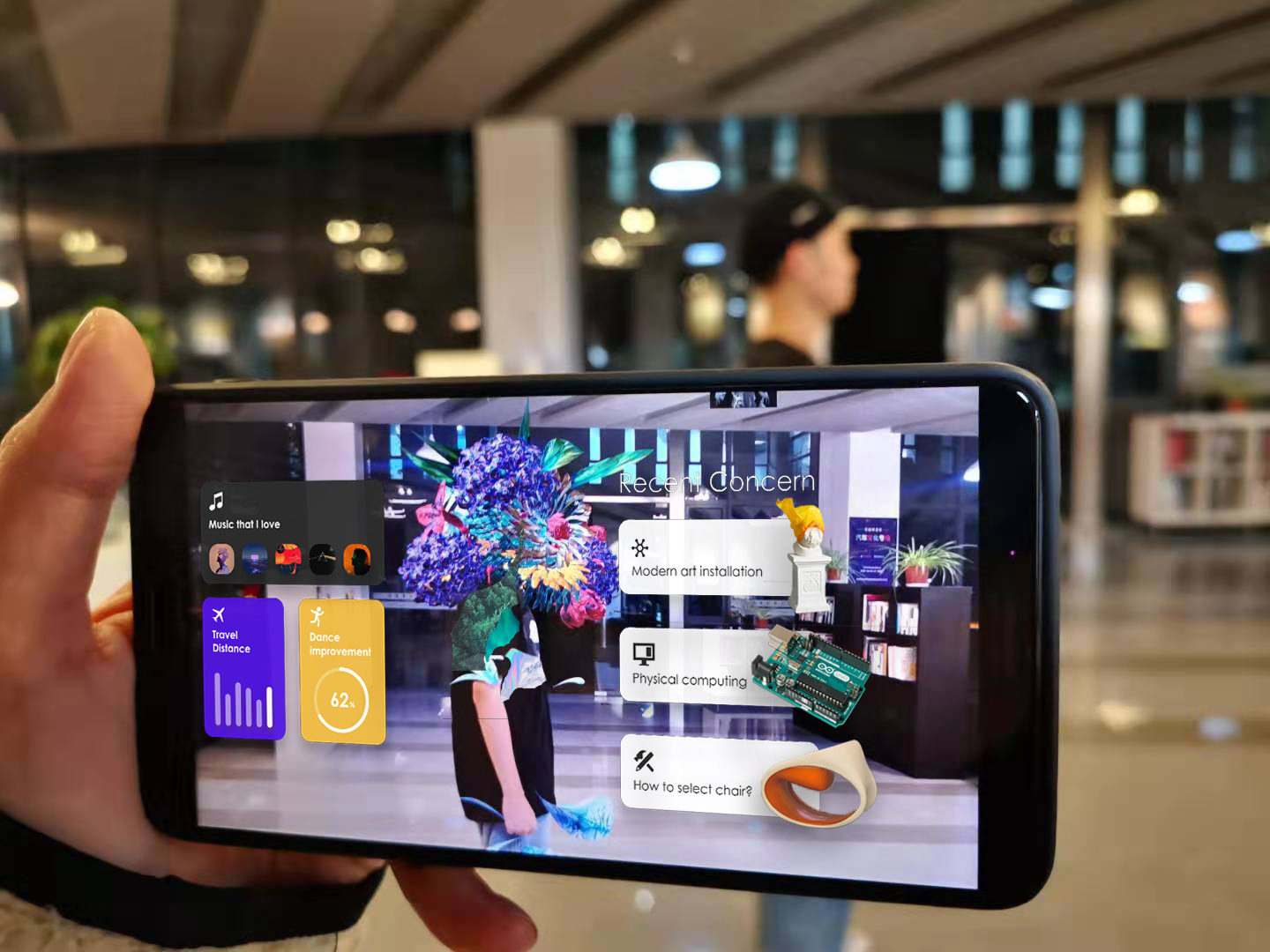

What are the foundational 3D interactions of a mixed-reality future? How can the boundaries between spaces be blurred? XR interfaces need to support additional interactions with virtual objects: interactions that are natural and intuitive, and that also provide seamless interaction between real and virtual objects.

This research investigates how to interact with cross-space objects in XR, and how to transition seamlessly between hand gestures for conflict-free arrangement of 3D digital content. We study cross-space object interactions among screen space, AR space and physical space. For screen-AR interaction, we enable cross-device object manipulation between the screen and AR space. For AR-physical interaction, we design gesture-based 3D scanning that turns physical objects into AR elements. Our demo also explores extracting and recognizing materials from physical objects, which then serve as elements for creating AR space. Finally, within the AR context, we define gestures for object manipulation, switching and deletion that open up a dialogue on alternative XR interaction design.

DESIGN IMPLEMENTATION

To see how virtual objects fit in the real space

Ray-casting is a grabbing technique in which a light ray extends from the user’s palm. When the ray intersects an object and the user pinches, the object is attached to the ray, and the user can then manipulate it easily with wrist or arm motion. In our implementation scenario, the user casts a ray at the table shown on the screen and pinches to pull it out of the screen into the real world, getting a sense of how the virtual object fits in the real space.
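The selection step can be sketched as a ray test. This is a minimal sketch, not the project’s implementation: it assumes each object is approximated by a bounding sphere, that the hand tracker supplies a palm position and pointing direction, and that the nearest hit wins. `Target`, `pick` and `attached_position` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(dot(v, v))
    return (v[0] / n, v[1] / n, v[2] / n)


@dataclass
class Target:
    name: str
    center: Vec3
    radius: float  # bounding-sphere approximation of the object (assumption)


def ray_hit_distance(origin: Vec3, direction: Vec3, t: Target) -> Optional[float]:
    """Distance along a unit-length ray to the target's bounding sphere, or None on a miss."""
    oc = sub(origin, t.center)
    b = dot(oc, direction)
    c = dot(oc, oc) - t.radius ** 2
    disc = b * b - c
    if disc < 0:
        return None
    dist = -b - math.sqrt(disc)      # nearer intersection
    if dist < 0:
        dist = -b + math.sqrt(disc)  # ray starts inside the sphere
    return dist if dist >= 0 else None


def pick(origin: Vec3, direction: Vec3, targets: Sequence[Target]) -> Optional[Target]:
    """Return the closest target hit by the palm ray, if any."""
    d = normalize(direction)
    best, best_dist = None, math.inf
    for t in targets:
        dist = ray_hit_distance(origin, d, t)
        if dist is not None and dist < best_dist:
            best, best_dist = t, dist
    return best


def attached_position(origin: Vec3, direction: Vec3, grab_dist: float) -> Vec3:
    """While pinched, keep the grabbed object at a fixed distance along the current ray."""
    d = normalize(direction)
    return (origin[0] + d[0] * grab_dist,
            origin[1] + d[1] * grab_dist,
            origin[2] + d[2] * grab_dist)
```

While the pinch is held, calling `attached_position` every frame with the current palm ray keeps the object at its grab distance, so wrist and arm motion carry it along the ray and out of the screen.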

Select virtual object

Point and select the object through raycasting

Drag the object out of screen space into real space

Envisioning a seamless transition between multiple realities

To create a virtual copy of a physical object

3D scanning is already a mature technology. We propose that combining it with AR makes the scanning process more intuitive and efficient, because users can look at the object they want to scan and at the scan result at the same time. The user first selects the ‘Scan’ function on the hand menu. Then, by palm-pointing with five fingers close together, the user 3D-scans the real object and creates a digital copy of it in AR space.
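Recognizing the scan pose could be approximated from tracked joint positions. This is a hedged sketch only: the text does not say how the pose is detected, so the fingertip ordering, the `is_scan_pose` name and all three thresholds are assumptions that would need tuning for a real hand tracker.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical thresholds in metres; real values depend on the tracker and hand size.
MIN_EXTENSION = 0.07  # each fingertip must be at least this far from the palm centre
FINGER_GAP = 0.03     # max gap between adjacent fingertips (index..little)
THUMB_GAP = 0.07      # thumb tip must stay near the index tip


def is_scan_pose(palm: Vec3, tips: Sequence[Vec3]) -> bool:
    """Flat, extended hand with fingers together; tips ordered thumb, index, middle, ring, little."""
    if len(tips) != 5:
        raise ValueError("expected five fingertip positions")
    thumb, *fingers = tips
    extended = all(math.dist(palm, t) >= MIN_EXTENSION for t in fingers)
    together = all(math.dist(a, b) <= FINGER_GAP for a, b in zip(fingers, fingers[1:]))
    thumb_close = math.dist(thumb, fingers[0]) <= THUMB_GAP
    return extended and together and thumb_close
```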

Scan a physical object

Using a hand gesture that mimics a scanning motion

A digital twin appears, ready to be manipulated

Allowing seamless digital manipulations of physical objects

To manipulate a virtual copy of a physical object

Moving two hands apart diagonally while pinching is a common metaphor for opening things up, so we propose “pull” as the gesture for exploding a 3D object in AR space. In our implementation scenario, the user explodes the chair to take a closer look at its structural details. When the user moves both hands back together while pinching, the exploded chair returns to its original state.
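One way to drive the explosion continuously from the two pinching hands is to map the change in hand separation to an explosion factor, then push each part away from the assembly centre by that factor. This is an illustrative sketch: `PullGesture`, `exploded_positions`, `MAX_EXPLODE` and the 0.3 m full-pull distance are hypothetical, and a real exploded view might use per-part directions authored by a designer rather than centre offsets.

```python
import math
from dataclasses import dataclass
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]

MAX_EXPLODE = 1.5  # hypothetical: parts travel at most 1.5x their offset from the centre


@dataclass
class PullGesture:
    start_distance: float   # hand separation (m) when both pinches began
    full_pull: float = 0.3  # hypothetical: extra separation (m) for a full explosion

    def factor(self, left: Vec3, right: Vec3) -> float:
        """Map the current hand separation to an explosion factor in [0, MAX_EXPLODE]."""
        extra = math.dist(left, right) - self.start_distance
        t = min(max(extra / self.full_pull, 0.0), 1.0)
        return t * MAX_EXPLODE


def exploded_positions(parts: Dict[str, Vec3], centre: Vec3, k: float) -> Dict[str, Vec3]:
    """Move each part away from the assembly centre by k times its offset."""
    out: Dict[str, Vec3] = {}
    for name, p in parts.items():
        offset = tuple(pi - ci for pi, ci in zip(p, centre))
        out[name] = tuple(pi + k * oi for pi, oi in zip(p, offset))
    return out
```

Because the factor is clamped at zero, bringing the hands back together returns every part to its assembled position.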

Exploding gesture

Hands pulling away from each other diagonally

Digital object exploded to reveal its structural components

Exploding according to the gesture movements

Extracting material from the real space and applying it to a digital chair to customize it

The user makes an ‘OK’ sign that mimics a magnifier to indicate a texture on a real object. The view inside the magnifier is scaled up so that the user can take a detailed look, which gives a feeling of magic since such an effect cannot be achieved in real life. We use a dwell time of three seconds as the confirmation technique so that the interaction flow is not interrupted. During the dwell time, a loading animation appears around the magnifier to show the confirmation progress. Once the material is confirmed, a material ball is generated beside the user’s hand and the magnifier inside the finger circle disappears.

The user then uses the ‘Grab to manipulate’ gesture to position the material ball on the digital object. Dwell time is used again to confirm application: while the material ball collides with the object, the material is previewed on the object to support decision making, and after three seconds of continuous collision the material is applied.
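Both confirmations in this flow (holding the magnifier on a texture, and keeping the material ball in contact with the object) follow the same dwell pattern, which can be sketched as a small timer. The three-second default comes from the text; the `DwellConfirm` class and its per-frame `update` call are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DwellConfirm:
    """Confirms an action once a condition has held continuously for `dwell` seconds."""
    dwell: float = 3.0
    _start: Optional[float] = None
    confirmed: bool = False

    def update(self, active: bool, now: float) -> float:
        """Call once per frame; returns progress in [0, 1] for the loading animation."""
        if self.confirmed:
            return 1.0
        if not active:
            self._start = None  # condition broken: restart the countdown
            return 0.0
        if self._start is None:
            self._start = now
        progress = min((now - self._start) / self.dwell, 1.0)
        if progress >= 1.0:
            self.confirmed = True
        return progress
```

One instance could track the magnifier hold and another the material-ball collision; the returned progress could drive the loading animation, and the material preview could be shown whenever progress is above zero.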

Extract real-world material

Gesturing a magnifying glass to locate and extract real-world materials

Digital object customized with real-world material

Move the material ball created from the real-world material and apply it to the virtual object

Flip through a catalogue of digital objects

Swiping is a widely used gesture in current user interaction design. We use swiping as the gesture for switching between selections in AR space.
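Switching could be modelled as a horizontal hand-velocity threshold that steps a wrap-around index through the catalogue. The speed and cooldown values, the right-is-next convention and the `Catalogue` class are assumptions for illustration, not details from the project.

```python
from dataclasses import dataclass
from typing import List, Optional

SWIPE_SPEED = 0.8  # hypothetical: horizontal hand speed (m/s) that counts as a swipe
COOLDOWN = 0.4     # hypothetical: seconds before another swipe is accepted


@dataclass
class Catalogue:
    items: List[str]
    index: int = 0
    _last_swipe: float = float("-inf")

    @property
    def current(self) -> str:
        return self.items[self.index]

    def on_hand_velocity(self, vx: float, now: float) -> Optional[str]:
        """Cycle in place when a fast enough horizontal swipe is detected; else return None."""
        if abs(vx) < SWIPE_SPEED or now - self._last_swipe < COOLDOWN:
            return None
        step = 1 if vx > 0 else -1  # assumed convention: right swipe shows the next item
        self.index = (self.index + step) % len(self.items)  # wrap around the catalogue
        self._last_swipe = now
        return self.current
```

The cooldown keeps one physical swipe, which spans several tracking frames, from advancing the catalogue more than once.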

Switch between different objects in a catalogue

Swiping gesture

Cycle through objects in-place

Switch between different catalogues of a certain object.

Deleting a virtual object

Point and shoot

Gesture mimicking a gun to indicate which object to delete.

Virtual object disintegrates

Object will be deleted with an explosion.

HAND GESTURE CATALOG

Grab to manipulate

Use a five-finger grasp to grab a nearby object.

Raycast and Pinch

Use palm pointing to indicate a distant target object, then pinch to grab it.

Magnify and Extract

Use a gesture that mimics a magnifier to indicate a texture on a real object.

Scan

Use palm-pointing with five fingers close together to 3D-scan a real object.

Delete

Point and shoot

Flip to show menu

Flip the left hand to show a menu anchored to it

Press a button

Use a finger to press a virtual button on the menu

Pull to explode

Pull both hands away from each other diagonally to explode the object
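Several catalog entries reduce to simple geometric tests on tracked joints. The sketch below shows two of them under stated assumptions: a pinch detector with separate press and release distances (hysteresis, so a grab does not flicker near the threshold) for ‘Raycast and Pinch’, and a palm-facing test for ‘Flip to show menu’. All thresholds and names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical thresholds; separate press/release values give hysteresis.
PINCH_ON = 0.02   # metres between thumb and index tips to start a pinch
PINCH_OFF = 0.04  # metres between the tips to release it
FACING_COS = 0.7  # palm counts as flipped within roughly 45 degrees of facing the head


@dataclass
class PinchDetector:
    pinching: bool = False

    def update(self, thumb_tip: Vec3, index_tip: Vec3) -> bool:
        d = math.dist(thumb_tip, index_tip)
        if self.pinching and d > PINCH_OFF:
            self.pinching = False
        elif not self.pinching and d < PINCH_ON:
            self.pinching = True
        return self.pinching


def palm_faces_user(palm_pos: Vec3, palm_normal: Vec3, head_pos: Vec3) -> bool:
    """True when the palm normal points toward the head, e.g. to show the hand menu."""
    to_head = tuple(h - p for h, p in zip(head_pos, palm_pos))
    n_len = math.sqrt(sum(c * c for c in palm_normal))
    h_len = math.sqrt(sum(c * c for c in to_head))
    if n_len == 0 or h_len == 0:
        return False
    cos = sum(a * b for a, b in zip(palm_normal, to_head)) / (n_len * h_len)
    return cos >= FACING_COS
```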

FUTURE WORK

In the near future, we plan to conduct a within-subjects user study of the proposed interaction techniques for cross-space content manipulation tasks. Since a seamless set of hand gesture techniques for cross-space content selection and manipulation has not been evaluated before, we are particularly interested in the practicality and consistency of the proposed techniques for these tasks. To this end, we are designing cross-level tasks with varied target sizes and corresponding destination areas to investigate whether the combined techniques provide seamless transitions between spaces.

We aim to gather both qualitative and quantitative user feedback to gain further insight into hand gesture interaction for cross-space workspace tasks. Accordingly, the goal of this evaluation is not to beat a particular baseline, but to see how people cope with the newly developed approaches when completing a given cross-space content manipulation task.

In the longer term, we aim to establish a database platform that collects XR hand gesture catalogs and provides a reference for universal XR user experience design. The platform is intended to support the growth of an XR UX design community spanning artistic, industrial, and academic use.
